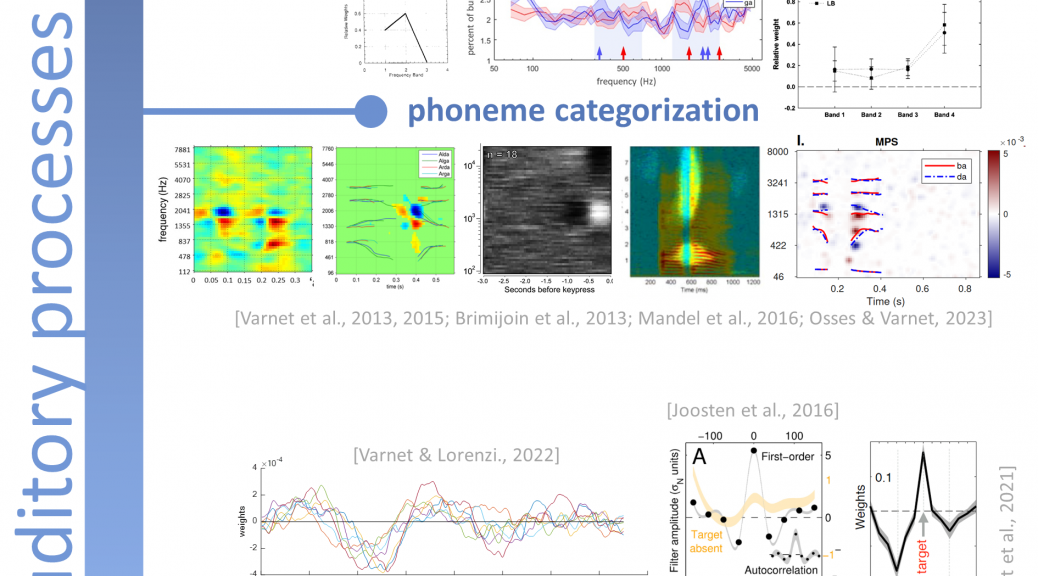

One of the main focuses of my research lies in the development and implementation of a new psycholinguistic approach, purely behavioral in nature, known as Auditory Classification Images (ACI). This approach draws inspiration from reverse correlation studies in vision psychophysics, which aimed to link random fluctuations in a stimulus to the corresponding perceptual response of a participant in a task, on a trial-by-trial basis. The objective of this project is to adapt and operationalize the reverse correlation technique for the auditory modality, with the ultimate goal of applying it to the exploration of speech perception, particularly phoneme comprehension. Auditory Classification Images enable us to visualize the « listening strategy » of a participant, revealing the information they extract from the auditory stimulus to perform the task.
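To make the trial-by-trial logic concrete, here is a minimal sketch of reverse correlation on a simulated observer. It is an illustration under stated assumptions, not the estimation procedure of any of the studies listed on this page: the time-frequency grid size, the `template` region, and the internal-noise level are all hypothetical, and the image is estimated with the simplest possible estimator (mean noise on "yes" trials minus mean noise on "no" trials).

```python
import numpy as np

rng = np.random.default_rng(0)

n_trials = 5000
n_freq, n_time = 8, 10  # hypothetical time-frequency grid

# Hypothetical "listening strategy": the simulated observer only relies
# on one small time-frequency region of the stimulus.
template = np.zeros((n_freq, n_time))
template[2:4, 4:7] = 1.0

# A fresh random noise field is drawn on every trial.
noise = rng.standard_normal((n_trials, n_freq, n_time))

# Simulated observer: template match plus internal noise, then a yes/no decision.
decision_variable = (noise * template).sum(axis=(1, 2)) + rng.standard_normal(n_trials)
responses = decision_variable > 0

# Classification image: average noise on "yes" trials minus average noise on "no" trials.
aci = noise[responses].mean(axis=0) - noise[~responses].mean(axis=0)

# Compare the estimate with the (normally unknown) template.
similarity = np.corrcoef(aci.ravel(), template.ravel())[0, 1]
print(f"correlation between estimate and template: {similarity:.2f}")
```

In a real experiment the template is unknown and the responses come from a listener, so the estimated image is the only window onto the strategy; the simulation simply shows that the response-conditioned average of the noise recovers the region the observer relied on.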

The MATLAB toolbox that we are developing is available as a GitHub repository: https://github.com/aosses-tue/fastACI. It can be used to replicate previous auditory revcorr experiments or to design new ones.

With this toolbox you can run the listening experiments used in the studies listed below and reproduce most of the figures in those references. The toolbox was also used to replicate Ahumada et al.'s seminal experiment (Ahumada, Marken & Sandusky, 1975). Experiments can be conducted on human participants through headphones, or on auditory models (Osses & Varnet, 2021; see also Osses & Varnet, 2024).

| Reference | fastACI experiment name | Task | Type of background noise | Target sounds |
|---|---|---|---|---|
| Ahumada, Marken & Sandusky, 1975 | replication_ahumada1975 | tone-in-noise detection | white | 500-Hz pure tone vs. silence |
| Varnet & Lorenzi 2022 | modulationACI | amplitude modulation detection | white | modulated or unmodulated tones |
| Varnet et al. 2013 | speechACI_varnet2013 | phoneme categorization | white | /aba/-/ada/, female speaker |
| Varnet et al. 2015, 2016a, 2016b | speechACI_varnet2015 | phoneme categorization | white | /alda/-/alga/-/arda/-/arga/, male speaker |
| Osses & Varnet 2021 | speechACI_varnet2013 | phoneme categorization | speech-shaped noise (SSN) | /aba/-/ada/, female speaker |
| Osses & Varnet 2024 | speechACI_Logatome | phoneme categorization | white, bump, MPS | /aba/-/ada/, male speaker from the OLLO database |
| Carranante et al., 2023, 2024 | speechACI_Logatome | phoneme categorization | bump | Pairs of contrasts using /aba/, /ada/, /aga/, /apa/, /ata/ from the same male speaker (S43M) in OLLO |
| Osses et al., 2023 | segmentation | word segmentation | random prosody | Pairs of contrasts: /l’amie/-/la mie/, /l’appel/-/la pelle/, /l’accroche/-/la croche/, /l’alarme/-/la larme/ |

Administrative details

Collaborators: Alejandro Osses

Funding:

NSCo Doctoral School, L. Varnet’s thesis scholarship (2012-2015)

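Agence Nationale de la Recherche, project ANR-20-CE28-0004 « Exploration des représentations phonétiques et de leur adaptabilité par la méthode des Images de Classification Auditive rapides » ("Exploring phonetic representations and their adaptability using the fast Auditory Classification Images method") (2021-2023)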

Selected publications and presentations:

English

Presentation of the auditory revcorr method

General overview of the toolbox

About the use of auditory models within the toolbox

Articles using the toolbox or reimplemented within the toolbox (see table above)

French

Varnet, L. (2015). Identification des indices acoustiques utilisés dans la compréhension de la parole dégradée. Université Claude Bernard Lyon 1. (PhD thesis)

Varnet, L. Presentation at the Fête de la Science (2020, ENS Paris): « Observer l’esprit humain sans neuroimagerie » (video)

« Visualiser ce que nous écoutons pour comprendre les sons de parole » (video)

On this blog:

– L’image de classification auditive, partie 1 : Le cerveau comme boîte noire

– L’Image de Classification Auditive, partie 2 : À la recherche des indices acoustiques de la parole

– A visual compendium of auditory revcorr studies